An MVP Can Accelerate Agile Success

Your Agile team just finished three months of sprints, delivering a feature-rich product update with everything stakeholders requested. Launch day arrives, and then... crickets. Users don't engage, and the new features sit unused. This scenario is common, and much of it is preventable.

The culprit isn't Agile methodology itself—it's the absence of validated learning before heavy investment. This is where the Minimum Viable Product (MVP), popularized by Eric Ries in The Lean Startup, becomes a strategic necessity. An MVP approach transforms how teams work, shifting from assumption-driven building to evidence-based iteration.

In this article, you'll discover how to leverage MVP thinking within your Agile framework, avoid common misconceptions, and implement practical strategies that lead to better products with less waste.

Quick Start: 5 Actions You Can Take Today

- Write your hypothesis clearly: "We believe [user segment] experiences [problem] and would value [solution]"

- Define success metrics before building: Set specific targets for activation, engagement, or retention

- Apply MoSCoW prioritization: Categorize features as Must/Should/Could/Won't have for your next release

- Add validation criteria to user stories: Include "We'll know this works if [measurable outcome]"

- Reframe your next sprint planning: Ask "What do we need to learn?" instead of "What features should we build?"

Understanding the MVP in Agile Context

A Minimum Viable Product is the smallest version of a product that can be released to real users to validate core assumptions and generate meaningful learning. The emphasis is on both "minimum" and "viable"—scaled down enough to build quickly, yet functional enough to provide genuine value and elicit authentic user feedback.

MVP vs. Related Concepts

Within Agile methodology, the MVP serves as the foundation for iterative development. While Agile emphasizes working in sprints and delivering incremental value, the MVP provides the strategic direction for those sprints. It answers the critical question: "What's the minimum we need to build to start learning?"

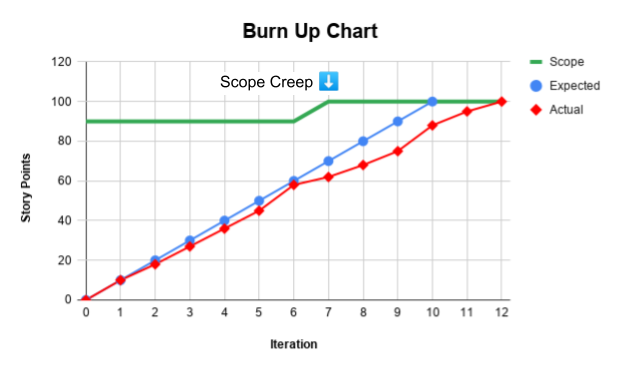

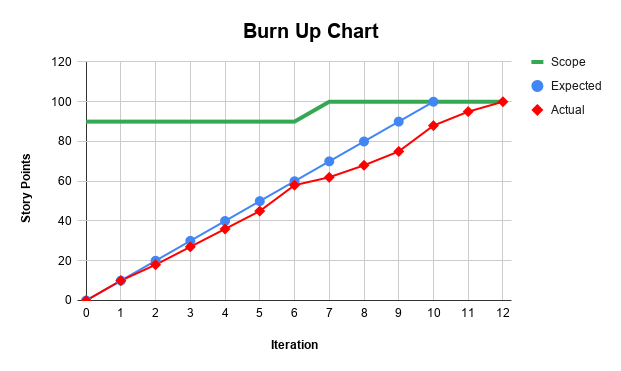

The relationship between the MVP, sprints, and iterative development creates a powerful feedback mechanism. Your first MVP might take several sprints to build, but once it launches, each subsequent sprint focuses on improvements validated by real user data. This is the build-measure-learn cycle, the foundation of evidence-based product development. Rather than planning features based on assumptions, you build a roadmap informed by evidence gathered in each iteration.

The Strategic Value of MVPs

The strategic case for MVPs in Agile development rests on five interconnected benefits that compound over time.

- Risk mitigation is the most immediate benefit. Every feature you build represents an investment in engineering time, design resources, opportunity cost, and organizational focus. Without validation, you're betting that investment on assumptions. An MVP approach turns the bet into a measured experiment: by testing your riskiest assumptions first with minimal investment, you can pivot or persevere based on evidence rather than intuition. Dropbox famously demonstrated this[1] with a short explainer video showing how the product would work, gauging demand before committing heavily to its file-sync technology.

- Faster time-to-market emerges when teams embrace true minimum thinking. Traditional product development often delays launch until feature completeness, sometimes taking years. MVP-driven Agile teams can launch in weeks or months, immediately starting the learning process. Spotify didn't wait to build a perfect music streaming service with every conceivable feature; they launched with core functionality and iterated based on user behavior. Each month of earlier market entry is a month of learning advantage over competitors.

- Customer-centricity moves from theory to practice. Product managers frequently say they're customer-focused, but without releasing MVPs they're focused on customer personas and assumptions, not real customers giving real feedback. An MVP forces direct contact with user needs. Instagram began as Burbn[2], a complex check-in app with many features. Usage data showed that people primarily used its photo-sharing functionality, which led the founders to strip everything else away and rebrand. That pivot, driven by what the early product revealed, created a billion-dollar company.

- Resource optimization becomes possible when you allocate budget and talent based on validated priorities rather than planned roadmaps. Instead of staffing a full team to build an entire vision, you can run leaner initially, scaling resources only for features that prove their worth. This is particularly valuable for organizations with limited resources or startups managing runway carefully.

- Competitive advantage accrues to teams that learn faster than competitors. In rapidly evolving markets, the winning product isn't necessarily the one with the most features—it's the one that adapts most effectively to changing customer needs. MVP-driven Agile teams complete learning cycles while competitors are still in planning phases, creating an intelligence gap that widens over time.
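The risk-mitigation point depends on knowing which assumptions are riskiest. One common heuristic, not drawn from the sources cited here, scores each assumption on impact (how badly the product fails if it is wrong) and uncertainty (how little evidence exists yet), then tests the highest combined score first. A minimal sketch, with hypothetical assumptions and 1-5 scores:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assumption:
    """A belief the product depends on, scored on hypothetical 1-5 scales."""
    statement: str
    impact: int       # how badly the product fails if this is wrong (1-5)
    uncertainty: int  # how little evidence we currently have (1-5)

    @property
    def risk(self) -> int:
        # Multiplicative score: only high impact AND high uncertainty ranks at the top.
        return self.impact * self.uncertainty


def riskiest_first(assumptions: list[Assumption]) -> list[Assumption]:
    """Order assumptions so the riskiest are tested first (ties keep input order)."""
    return sorted(assumptions, key=lambda a: a.risk, reverse=True)


backlog = [
    Assumption("Owners will enter invoices manually", impact=3, uncertainty=2),
    Assumption("Owners will pay for automated reconciliation", impact=5, uncertainty=4),
    Assumption("Owners need multi-currency support", impact=2, uncertainty=3),
]

for a in riskiest_first(backlog):
    print(a.risk, a.statement)
```

The scores are judgment calls, not measurements; the value is in forcing the team to argue about them explicitly before choosing what the MVP should test.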

Common MVP Misconceptions

Despite widespread discussion of MVPs, several persistent misconceptions undermine their effectiveness in Agile teams.

The first and most damaging myth is that "MVP means low quality." This fundamentally misunderstands "minimum" and "viable." Minimum refers to scope, not craftsmanship. A viable product must work reliably and provide genuine value within its limited scope. If your MVP is buggy, confusing, or frustrating, users will abandon it before you gather meaningful feedback. The car analogy is helpful here: an MVP isn't a car with three wheels and no seats; it's a skateboard—different scope, but fully functional for its purpose. Your MVP should delight users with what it does, even if what it does is intentionally limited.

Another misconception is that "MVP is just about speed." While MVPs do accelerate time-to-market, speed is a means, not the end. The true purpose is validation—testing whether your product solves a real problem for real users in a way they'll adopt. Rushing to launch something poorly conceived defeats the purpose entirely. Speed matters because faster launches mean faster learning cycles, but learning is the actual goal.

Many teams also believe "one MVP is enough." This reveals a fundamental misunderstanding of iterative development. The first MVP validates your core hypothesis, but it's just the beginning. Each iteration is, in a sense, a new MVP—the minimum next step that tests your next most important assumption. Agile methodology thrives on this continuous cycle of building, measuring, and learning. Treating MVP as a one-time milestone rather than a mindset leads teams back to waterfall thinking.

Finally, teams sometimes assume "MVP works for every product." While the MVP approach is broadly applicable, certain products, particularly in highly regulated industries or those requiring significant infrastructure, may need substantial initial investment before user validation is possible. A new medical device typically needs regulatory sign-off (in the US, from the FDA) before it reaches patients, whether in a clinical study or on the market; a payment processing system needs robust security from day one. Even in these contexts, however, MVP thinking can be applied creatively through prototypes, pilot programs, or phased rollouts of validated subsets of functionality before full-scale launch.

When NOT to Use a Pure MVP Approach

Be cautious with MVPs when:

- Brand reputation is at stake: A visibly limited release under an established brand, especially in a crowded market, can damage brand perception in ways that are slow to repair

- Competitors can fast-follow: Validating an idea publicly may educate competitors who can quickly copy your approach with more resources

- Regulatory requirements are stringent: Industries like healthcare, finance, or aviation may require substantial compliance before any user interaction

- Market timing windows are narrow: Some opportunities require a more complete offering to capture critical momentum

The danger common to all these misconceptions is pursuing "minimum" without "viable." Teams either build too little (shipping something that can't genuinely test the hypothesis) or too much (adding features beyond what validation requires). The discipline lies in finding the point where minimum meets viable.

Building an Effective MVP in Agile Teams

Implementing MVP thinking within Agile teams requires a structured, cyclical approach. These steps aren't linear—you'll cycle through them repeatedly, with each iteration informing the next.

Step 1: Identify the core problem and target user. Before writing a single line of code, crystallize exactly what problem you're solving and for whom. This sounds obvious, but teams frequently skip this step, building solutions in search of problems. Document your hypothesis clearly: "We believe [specific user segment] experiences [specific problem], and they would value [specific solution]." For example, "We believe small business owners struggle with manual invoice tracking and would pay for automated reconciliation." This clarity guides every subsequent decision.

Step 2: Define success metrics before building. How will you know if your MVP succeeds? Establish concrete, measurable criteria before launch.

Sample Success Metrics Framework

Without predetermined metrics, teams tend to rationalize disappointing results rather than learning from them.
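Predetermined metrics work best when they are written down somewhere the team cannot quietly edit after launch. A minimal sketch, assuming the illustrative targets listed in this article's Key Takeaways (40% activation, 30% engagement, 20% retention) and leaving the exact definition of each rate to your team:

```python
# Targets fixed before development starts. These mirror the article's
# illustrative figures; they are not industry benchmarks.
SUCCESS_TARGETS: dict[str, float] = {
    "activation": 0.40,
    "engagement": 0.30,
    "retention": 0.20,
}


def evaluate(observed: dict[str, float],
             targets: dict[str, float] = SUCCESS_TARGETS) -> dict[str, bool]:
    """Return, for each predefined metric, whether its target was met.

    A metric that was never measured counts as not met instead of being
    skipped, so a gap in instrumentation cannot quietly pass.
    """
    return {name: observed.get(name, 0.0) >= target
            for name, target in targets.items()}


results = evaluate({"activation": 0.46, "engagement": 0.22, "retention": 0.21})
print(results)  # {'activation': True, 'engagement': False, 'retention': True}
```

Treating a missing measurement as a miss is a deliberate design choice: it is the code equivalent of refusing to rationalize a disappointing result.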

Step 3: Prioritize features ruthlessly. This is where most teams struggle. List every feature you consider important, then use a prioritization framework to separate essential from nice-to-have.

MoSCoW Prioritization Example: Invoice Management App

Must-Have (MVP Release):

- User authentication and account creation

- Manual invoice entry (basic fields: amount, date, vendor, status)

- Invoice list view with status filters (paid/unpaid/overdue)

- Mark invoice as paid/unpaid

- Basic dashboard showing total unpaid amount

Should-Have (Post-MVP, validated by user feedback):

- PDF invoice upload with OCR text extraction

- Automatic payment reminders

- Multi-currency support

- Export to CSV/Excel

Could-Have (Future iterations if demand exists):

- Integration with accounting software (QuickBooks, Xero)

- Bulk invoice import

- Custom reporting and analytics

- Mobile app

Won't-Have (Not aligned with core hypothesis):

- Full accounting features (general ledger, balance sheet)

- Payroll management

- Inventory tracking

Your MVP includes only the Must-haves—features absolutely necessary to test your core hypothesis. Everything else gets deferred to future iterations based on learning. A common test: if removing a feature would make it impossible to validate your hypothesis, it's a Must-have. If removal would simply make the product less impressive, it's not.
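The MoSCoW split can live as data rather than as a slide, so the MVP scope is exactly what is tagged Must-have and nothing else. A sketch using a subset of the invoice-app backlog above; the `Priority` enum and the helper names are hypothetical:

```python
from enum import Enum


class Priority(Enum):
    MUST = "Must-have"
    SHOULD = "Should-have"
    COULD = "Could-have"
    WONT = "Won't-have"


# A subset of the invoice-app backlog, tagged with its MoSCoW category.
BACKLOG: dict[str, Priority] = {
    "User authentication and account creation": Priority.MUST,
    "Manual invoice entry": Priority.MUST,
    "Invoice list view with status filters": Priority.MUST,
    "Mark invoice as paid/unpaid": Priority.MUST,
    "Basic dashboard showing total unpaid amount": Priority.MUST,
    "PDF invoice upload with OCR text extraction": Priority.SHOULD,
    "Automatic payment reminders": Priority.SHOULD,
    "Integration with accounting software": Priority.COULD,
    "Mobile app": Priority.COULD,
    "Payroll management": Priority.WONT,
}


def mvp_scope(backlog: dict[str, Priority]) -> list[str]:
    """Only Must-haves ship in the MVP."""
    return [feature for feature, p in backlog.items() if p is Priority.MUST]


def deferred(backlog: dict[str, Priority]) -> dict[Priority, list[str]]:
    """Group everything else by category for later, evidence-driven iterations."""
    groups: dict[Priority, list[str]] = {}
    for feature, p in backlog.items():
        if p is not Priority.MUST:
            groups.setdefault(p, []).append(feature)
    return groups


for feature in mvp_scope(BACKLOG):
    print("MVP:", feature)
```

Re-tagging a feature then becomes a visible, reviewable change, which makes scope creep easier to spot in sprint planning.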

Step 4: Build with quality but strategic scope. This is where "minimum" meets "viable." Engineer your MVP with production-quality code for the features you include, but be ruthless about limiting scope. Choose technologies that enable rapid iteration. Accept technical debt strategically in areas unlikely to affect core validation, but invest in quality for critical path functionality. If you're testing whether users want automated invoice reconciliation, the reconciliation engine must be robust, but the reporting dashboard can be basic.
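To make "production quality within a strategic scope" concrete, here is a minimal sketch of the invoice app's Must-have features from Step 3: manual entry with basic fields, paid/unpaid/overdue filters, marking an invoice paid or unpaid, and a total-unpaid figure for the dashboard. Assumptions: in-memory storage, the article's "date" field treated as the due date so that "overdue" can be derived, and amounts held as `Decimal`. The class and method names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal
from enum import Enum


class Status(Enum):
    UNPAID = "unpaid"
    PAID = "paid"


@dataclass
class Invoice:
    """Manual invoice entry with the basic fields from the Must-have list."""
    vendor: str
    amount: Decimal       # Decimal avoids binary floating-point rounding on money
    due_date: date        # assumption: the article's "date" field is the due date
    status: Status = Status.UNPAID

    def __post_init__(self) -> None:
        # Small scope, but the critical path still validates its input.
        if not self.vendor.strip():
            raise ValueError("vendor is required")
        if self.amount <= 0:
            raise ValueError("amount must be positive")

    def mark(self, status: Status) -> None:
        """Mark the invoice as paid or unpaid."""
        self.status = status

    def is_overdue(self, today: date) -> bool:
        # "Overdue" is derived rather than stored: unpaid and past its due date.
        return self.status is Status.UNPAID and self.due_date < today


class InvoiceBook:
    """In-memory store covering only the Must-have features."""

    def __init__(self) -> None:
        self._invoices: list[Invoice] = []

    def add(self, invoice: Invoice) -> None:
        self._invoices.append(invoice)

    def list_view(self, view: str, today: date) -> list[Invoice]:
        """List view with the paid / unpaid / overdue status filters."""
        if view == "paid":
            return [i for i in self._invoices if i.status is Status.PAID]
        if view == "unpaid":
            return [i for i in self._invoices if i.status is Status.UNPAID]
        if view == "overdue":
            return [i for i in self._invoices if i.is_overdue(today)]
        raise ValueError(f"unknown view: {view!r}")

    def total_unpaid(self) -> Decimal:
        """The one figure the basic dashboard shows."""
        return sum((i.amount for i in self._invoices if i.status is Status.UNPAID),
                   Decimal("0"))


book = InvoiceBook()
lease = Invoice("Office Lease Co", Decimal("1200.00"), date(2024, 1, 31))
ink = Invoice("Print Shop", Decimal("80.50"), date(2024, 3, 1))
book.add(lease)
book.add(ink)
lease.mark(Status.PAID)
print(book.total_unpaid())
print([i.vendor for i in book.list_view("overdue", today=date(2024, 3, 15))])
```

Everything outside this list (OCR, reminders, integrations) is simply absent, while the parts that are present still reject bad input; that is the "minimum scope, viable quality" trade in miniature.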

Step 5: Deploy and measure. Launch to a targeted user segment: early adopters willing to tolerate limited functionality in exchange for solving a pressing problem. Instrument your MVP thoroughly to capture both quantitative data (usage metrics, conversion rates) and qualitative feedback (user interviews, support tickets, survey responses). Modern analytics tools make collection straightforward; the key is knowing what to measure, which is exactly what your Step 2 metrics define.
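Instrumentation only pays off if raw events can be turned back into the metrics chosen in Step 2. A minimal sketch, assuming a hypothetical event log of `(user_id, name, day)` records where `day` counts days since that user signed up, and two illustrative definitions: activation is the share of signed-up users who entered at least one invoice, and retention is the share still active on day 28 or later. Your own definitions will differ; the point is to fix them before launch.

```python
from typing import NamedTuple


class Event(NamedTuple):
    user_id: str
    name: str  # hypothetical event names, e.g. "signup", "invoice_created"
    day: int   # days since this user's signup


def cohort_metrics(events: list[Event], retention_day: int = 28) -> dict[str, float]:
    """Compute illustrative activation and retention rates for one signup cohort."""
    signed_up = {e.user_id for e in events if e.name == "signup"}
    if not signed_up:
        return {"activation": 0.0, "retention": 0.0}
    activated = {e.user_id for e in events
                 if e.name == "invoice_created" and e.user_id in signed_up}
    retained = {e.user_id for e in events
                if e.day >= retention_day and e.user_id in signed_up}
    n = len(signed_up)
    return {"activation": len(activated) / n, "retention": len(retained) / n}


sample_events = [
    Event("u1", "signup", 0), Event("u1", "invoice_created", 0),
    Event("u1", "invoice_created", 30),
    Event("u2", "signup", 0), Event("u2", "invoice_created", 2),
    Event("u3", "signup", 0),
    Event("u4", "signup", 0),
]
print(cohort_metrics(sample_events))  # {'activation': 0.5, 'retention': 0.25}
```

Counting users through sets means a user who creates ten invoices still counts once, which keeps the rates comparable with the per-user targets set before launch.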

Step 6: Learn and iterate. Analyze your data against predetermined success criteria. Did users engage as hypothesized? What unexpected behaviors emerged? What feedback patterns surfaced? Based on this learning, decide whether to persevere with enhancements, pivot to a different approach, or stop entirely. This decision-making moment is where MVP discipline pays dividends—you have real data, not opinions, guiding your next sprint planning.

MVP Experiment Log Template
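One way to keep such a log is as structured, append-only records. A minimal sketch, assuming each entry captures the Step 1 hypothesis, the Step 2 metric and target, the Step 5 observation, and the Step 6 decision; all field and class names are hypothetical, and the decision itself remains a human call that the code merely records:

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class Decision(Enum):
    PERSEVERE = "persevere"
    PIVOT = "pivot"
    STOP = "stop"


@dataclass(frozen=True)
class ExperimentEntry:
    hypothesis: str    # Step 1 statement
    metric: str        # Step 2 metric name
    target: float      # Step 2 target, set before launch
    observed: float    # Step 5 measurement
    decision: Decision
    learning: str      # what surprised the team, in a sentence or two
    logged_on: date

    @property
    def target_met(self) -> bool:
        return self.observed >= self.target


class ExperimentLog:
    """Append-only: entries can be recorded and read, but not edited."""

    def __init__(self) -> None:
        self._entries: list[ExperimentEntry] = []

    def record(self, entry: ExperimentEntry) -> None:
        self._entries.append(entry)

    def entries(self) -> tuple[ExperimentEntry, ...]:
        # An immutable snapshot, so callers cannot rewrite history.
        return tuple(self._entries)

    def misses(self) -> list[ExperimentEntry]:
        """Entries whose predefined target was not reached."""
        return [e for e in self._entries if not e.target_met]


HYPOTHESIS = "Small business owners would pay for automated reconciliation"
log = ExperimentLog()
log.record(ExperimentEntry(HYPOTHESIS, "activation", target=0.40, observed=0.46,
                           decision=Decision.PERSEVERE,
                           learning="Hypothetical: most users entered invoices on day one.",
                           logged_on=date(2024, 5, 1)))
log.record(ExperimentEntry(HYPOTHESIS, "retention", target=0.20, observed=0.12,
                           decision=Decision.PIVOT,
                           learning="Hypothetical: few users returned after the first week.",
                           logged_on=date(2024, 6, 1)))
print([e.metric for e in log.misses()])
```

Frozen entries plus a read-only snapshot make it awkward to revise a past result after the fact, which supports the aim of learning from disappointing numbers rather than explaining them away.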

Remember: This framework is cyclical, not linear. Each iteration through these steps generates learning that informs your next cycle. The discipline lies in maintaining focus on learning rather than building for its own sake.

Integrating MVP with Agile Practices

MVP thinking doesn't replace Agile practices—it enhances them, providing strategic direction for tactical execution.

Sprint planning transforms when filtered through an MVP lens. Rather than filling sprints with features from a predetermined roadmap, teams ask: "What's the most important thing we need to learn next?" This question drives sprint goals toward validation rather than implementation. User stories become testable hypotheses. Instead of "As a user, I want to filter search results," you write "As a small business owner, I want to filter invoices by status so I can quickly identify unpaid items." The acceptance criteria then include both functional requirements and learning goals.

User stories and acceptance criteria for MVP development should explicitly state what you're testing. A well-crafted MVP user story includes the standard format (As a [user], I want [feature] so that [benefit]), but adds validation criteria: "We'll know this is valuable if [measurable outcome]." This keeps teams focused on why they're building, not just what they're building.
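The MVP user-story format can also be captured as a small structure so that every story carries its validation criterion. A sketch built on the invoice-filter story above; the metric wording and the 30% target are hypothetical placeholders, not recommendations:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MvpUserStory:
    role: str
    want: str
    benefit: str
    # Validation criterion: "We'll know this is valuable if <metric> reaches <target>."
    metric: str
    target: float

    def text(self) -> str:
        """Render the standard story format plus its validation criterion."""
        return (f"As {self.role}, I want {self.want} so that {self.benefit}. "
                f"We'll know this is valuable if {self.metric} reaches "
                f"{self.target:.0%}.")

    def validated_by(self, observed: float) -> bool:
        return observed >= self.target


story = MvpUserStory(
    role="a small business owner",
    want="to filter invoices by status",
    benefit="I can quickly identify unpaid items",
    metric="the share of weekly active users who use the unpaid filter",
    target=0.30,
)
print(story.text())
```

Because the criterion is a required field, a story cannot be written without stating how its value will be judged.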

Balancing technical debt with rapid iteration requires judgment and communication. Some technical debt is acceptable when launching an MVP—you're testing whether anyone wants the product before optimizing its architecture. However, debt that impedes learning or future iteration is dangerous. Establish clear guidelines: take shortcuts in presentation or convenience features, but invest properly in data collection, core functionality, and architectural decisions that would be expensive to change later.

Stakeholder communication and expectation management becomes critical when MVP scope appears limited. Executives and stakeholders often expect polish and completeness. Your role is educating them on the strategic value of learning before building. Frame the MVP as a de-risking investment: "Before we commit $500K to building the full vision, let's invest $50K to validate the core assumptions. If we're right, we'll scale with confidence. If we're wrong, we've saved $450K and gained invaluable market intelligence." Most stakeholders appreciate this fiscal responsibility when properly communicated.

The product owner's role in MVP development is particularly crucial. They must champion learning over features, defend scope limitations against feature creep, and translate user feedback into actionable sprint priorities. An effective product owner maintains a vision of the ultimate product while having the discipline to build it incrementally based on validated learning. They're comfortable with ambiguity, data-driven in decision-making, and skilled at stakeholder negotiation when pressure mounts to add "just one more feature" before launch.

Conclusion

The integration of MVP thinking with Agile methodology represents more than tactical efficiency—it's a strategic imperative for organizations competing in markets where customer needs evolve rapidly. Teams that embrace this approach don't just build faster; they build smarter, channeling resources toward validated opportunities rather than speculative features.

The execution is challenging. It requires discipline to ship products that feel incomplete, courage to face real market feedback, and organizational maturity to view "failed" MVPs as successful learning. But the alternative—building elaborate products based on untested assumptions—carries far greater risk.

Questions to Assess Your Current Approach

Are we building to learn, or building to launch?

Are our sprints driven by validated priorities or predetermined roadmaps?

Do we have concrete success metrics defined before development starts?

Are we comfortable releasing products that test hypotheses rather than showcase capabilities?

Have we identified which of our assumptions are riskiest and deserve testing first?

Your answers will reveal whether you're truly leveraging the MVP advantage or simply paying lip service to Agile transformation. The future belongs to adaptive organizations that treat building as experiments and products as ongoing conversations with customers.

Key Takeaways

- MVP is strategic validation, not just fast delivery - Focus on learning what customers need before heavy investment

- Know where the concept comes from - The MVP approach was popularized by Eric Ries in The Lean Startup

- "Minimum" refers to scope, not quality - Build fewer features excellently rather than many features poorly

- Define success metrics before building - Set concrete targets, for example 40%+ activation, 30%+ engagement, 20%+ retention, adjusted to your product and market

- Ruthlessly prioritize using MoSCoW - Only Must-have features make it into the MVP; everything else waits

- Think cyclically, not linearly - The build-measure-learn cycle repeats with each iteration

- Know when NOT to use MVP - Brand-sensitive markets, highly regulated industries, or narrow timing windows may require different approaches

- Combine quantitative and qualitative feedback - Metrics show what happens; interviews reveal why

References

- Kosner, A. (2011). "Dropbox: The Inside Story Of Tech's Hottest Startup." TechCrunch. Retrieved from https://techcrunch.com/2011/10/19/dropbox-minimal-viable-product/

- Yarow, J. (2011). "The Story Of How Instagram Got Started." Business Insider. Retrieved from https://www.businessinsider.com/how-instagram-was-founded-2011-4

Further Reading

- Ries, E. (2011). The Lean Startup: How Today's Entrepreneurs Use Continuous Innovation to Create Radically Successful Businesses. Crown Business.
